Back in January 2020, Shopify publicly announced its adoption of React Native. This meant from that point forward, all of our new mobile apps would be built with the React Native framework. By extension, this also meant a new learning opportunity for our accessibility team, in testing apps and guiding design and development teams to create accessible user experiences.

A few months later, a handful of talented volunteers from Shopify started working on a new React Native app called COVID Shield: a COVID-19 exposure notification solution. While the team was building COVID Shield, I was brought on to conduct accessibility testing alongside development.

This post describes how I tested COVID Shield for accessibility, with a close look at a few key issues and their solutions. Hopefully, this advice will help you test your own apps and create an accessible experience for all your users.

Before we dive into those details, it's worth noting that COVID Shield was adopted by the Canadian federal government to notify Canadian citizens of potential exposure to COVID-19. The app was rebranded as COVID Alert, and future development was taken over by the Canadian Digital Service (CDS) team. Now, let's jump in.

Test environment

Since the COVID Shield app was in active development, my testing environment included simulators and emulators alongside a physical device:

- iOS simulator paired with macOS Accessibility Inspector

- iOS simulator paired with macOS VoiceOver

- Android emulator paired with TalkBack screen reader

- Physical Android device with a snapshot of the COVID Shield APK installed

For a full understanding of how to use these tools to test app accessibility, you can read my post Mobile Screen Reader Testing.

React Native Accessibility API

Facebook provides the React Native Accessibility API so that app developers can create accessible and inclusive user experiences. This was my go-to resource when making recommendations to the team.

This API includes a series of React methods and props to provide information like roles, names, and state to interactive elements. It also includes other items to increase general accessibility of an app while using assistive technology.

If you're at all familiar with HTML, the DOM, or the ARIA spec, then you've got a good start on using the Accessibility API. Concepts such as adding a role to an element to provide semantic meaning, setting a label on a control via aria-label, or hiding something completely with aria-hidden are all possible.
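Hiding content is the one concept above that this post doesn't come back to, and React Native handles it with two platform-specific props rather than a single attribute. The sketch below gathers the rough ARIA-to-React-Native correspondences used throughout the post and adds a hypothetical hiddenFromAssistiveTech() helper. The mapping object and the helper are illustrations, not part of React Native; accessibilityElementsHidden and importantForAccessibility are real React Native props.

```javascript
// Rough correspondences between the web attributes mentioned in this post
// and React Native accessibility props (a study aid, not an exhaustive map).
const ariaToReactNative = {
  role: 'accessibilityRole',
  'aria-label': 'accessibilityLabel',
  'aria-disabled': 'accessibilityState.disabled',
  'aria-selected': 'accessibilityState.selected',
  'aria-description': 'accessibilityHint',
};

// Hypothetical helper (not part of React Native) approximating
// aria-hidden="true": each platform uses its own prop.
function hiddenFromAssistiveTech() {
  return {
    accessibilityElementsHidden: true, // iOS: hide this view and its children
    importantForAccessibility: 'no-hide-descendants', // Android equivalent
  };
}
```

A purely decorative icon could then be wrapped as `<View {...hiddenFromAssistiveTech()}>…</View>` so that neither VoiceOver nor TalkBack stops on it.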

Adding semantics: role, name, state

Let's start with the concept of adding semantic meaning to an element: role, name, and state.

When developing for the web, authors have native "clickable" elements with which to work: button and link elements. A button is typically used to submit a form or perform an on-screen action, such as launching a modal window. Links are used for requesting new data or shifting focus from one point of the screen to another. These elements come with their respective semantics, shared via their role, name, and state (if applicable).

Clickable elements in React Native

In my experience with the COVID Shield app, the team decided to make the app appear more native for each platform by way of a custom Button component. This component included some logic to generate platform-specific touch controls. For example:

- iOS devices would receive the TouchableOpacity component

- Android devices used the Ripple component, imported from the react-native-material-ripple package

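```jsx
// Android: material-style ripple feedback from react-native-material-ripple
if (Platform.OS === 'android') {
  return (
    <Ripple onPress={onPressHandler} …>
      {content}
    </Ripple>
  );
}

// iOS: the built-in opacity feedback
return (
  <TouchableOpacity onPress={onPressHandler} …>
    {content}
  </TouchableOpacity>
);
```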

The issue I found with these components was that they did not include a role to convey the purpose of the clickable element. Without knowing what the element is, the user may not understand what will happen when they interact with it. We want to help our users not only be successful when using our apps, but also to be confident when doing so.

Adding a role

While I was testing COVID Shield with a screen reader on either iOS or Android, I noticed each clickable/interactive control was missing a role description. The screen reader would stop on the control and only announce its name, if one existed. As a sighted user, I had the visual affordance; that is, the design of the button indicated it was a clickable control. But for a screen reader user, this information was not shared, which may lead to confusion or frustration.

In React Native, adding a role to provide context on the current element is a matter of adding the accessibilityRole prop to the component that receives the press event. This prop takes one of a set of string values defined by the API; the value "button" denotes that an on-screen action will result upon activation.

```jsx
<TouchableOpacity
  accessibilityRole="button"
  …
>
  {/* React Native requires strings to be rendered inside <Text> */}
  <Text>Enter code</Text>
</TouchableOpacity>
```

In HTML, this is similar to adding the role attribute to an element and assigning it the value of "button."

Before:

- 🗣 "Enter code"

After:

- 🗣 "Enter code, button"

For example, the screenshot above shows COVID Shield with a visually styled button named "Enter code." Without the explicit role declaration, there was only a "blip" sound when the control came into focus by the screen reader. Nothing more was shared to indicate what the element actually was.

After we applied the accessibilityRole prop with the appropriate "button" value, the control was then described as, "Enter code, button."

Again, the purpose of this is to alert the user about what it is they're currently focused on. The "button" aural description provides a clue to what might happen upon interaction, which in this case was loading a new view onto the screen to input a code.
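Since the team already had a shared custom Button component, the role fix could live in one place. A minimal sketch of that idea, assuming a hypothetical withDefaultRole() helper that is not part of React Native or the COVID Shield codebase: it supplies accessibilityRole "button" unless the caller passes a different role.

```javascript
// Hypothetical helper for a shared Button component: guarantees every
// instance gets a role, while still letting callers override it (for
// example, "link" for controls that leave the app).
function withDefaultRole(props = {}, defaultRole = 'button') {
  // The destructuring default applies when accessibilityRole is missing
  // or undefined, so a caller-supplied role always wins.
  const { accessibilityRole = defaultRole, ...rest } = props;
  return { ...rest, accessibilityRole };
}
```

Both branches of the Button could then spread the same object, for example `<TouchableOpacity onPress={onPressHandler} {...withDefaultRole(a11yProps)}>`, where `a11yProps` is a hypothetical prop bag passed into Button. Applying the same spread to Ripple assumes the package forwards accessibility props to its underlying touchable, which is worth confirming for the version you use.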

Add a name

There were a few clickable elements in the app which only used icons to provide a visual affordance. Not only were these controls missing their role, they were also missing an accessible name to provide details on what they were meant for.

While testing, the screen reader would stop on the control and not announce anything. Again, as a sighted user I had the visual affordance of the icon indicating the control's purpose. But without a role and name, a screen reader user would experience a seemingly unnecessary tab-stop.

In React Native, adding a name to provide a sense of purpose for the current element the user is interacting with is a matter of including the accessibilityLabel prop. This prop takes a string value which is defined by the author, so be sure to include something that's appropriate for the context of the control.

```jsx
<TouchableOpacity
  accessibilityLabel="Close"
  accessibilityRole="button"
  …
>
  {/* Icon… */}
</TouchableOpacity>
```

In HTML, this is similar to adding the aria-label attribute to an element and assigning it an accessible name for screen readers to announce.

Before:

- 🗣 "…" 🤷‍♂️

After:

- 🗣 "Close, button"

In the screenshot above, I've highlighted an icon control with a downward-pointing arrow. This is meant to indicate that this portion of the screen is collapsible. However, since there was no label or role, the screen reader would stop on the control and provide no further information.

After adding the explicit name and role via the accessibilityLabel and accessibilityRole props, the control was announced as, "Close, button." With this, the user would understand the control's purpose and be confident about the result of activating it.
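Icon-only controls are easy to miss in review because they look complete on screen. One option, assuming your team is comfortable with development-only warnings, is a small check like the hypothetical lacksAccessibleName() below, which flags a control that has neither an accessibilityLabel nor visible text. How you derive visibleText from your component's children is left as an assumption.

```javascript
// Hypothetical development-time check (not a React Native API). It reports
// whether a control would be announced without a name: no accessibilityLabel
// and no visible text, as with the icon-only control described above.
function lacksAccessibleName({ accessibilityLabel, visibleText } = {}) {
  // Treat whitespace-only strings as empty, since they give a screen
  // reader nothing meaningful to announce.
  const label = typeof accessibilityLabel === 'string' ? accessibilityLabel.trim() : '';
  const text = typeof visibleText === 'string' ? visibleText.trim() : '';
  return label === '' && text === '';
}
```

Inside a shared Button, a call such as `if (__DEV__ && lacksAccessibleName({ accessibilityLabel })) console.warn('Button has no accessible name');` would surface the problem during development; `__DEV__` is React Native's development-mode flag.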

Add state

Throughout the COVID Shield app, there were controls in a specific state. In one view, there was a list of checked or unchecked items. In another, there was a form with the "submit" control disabled by default. I knew these things because, as a sighted user, they were communicated to me via the visual design.

A screen reader user, however, would not be able to acquire such information via the aural user experience. Nothing was added programmatically to provide information on the control's current state.

In React Native, providing the state of the element is a matter of including the accessibilityState prop. This prop takes an object whose keys (disabled, selected, checked, busy, and expanded) and allowed values are defined by the API.

For example, setting a control as "disabled" is a matter of assigning the accessibilityState prop with an object key name of disabled and setting its value to true.

```jsx
<TouchableOpacity
  accessibilityRole="button"
  // JSX needs double braces: an expression wrapping an object literal
  accessibilityState={{ disabled: true }}
  …
>
  <Text>Submit code</Text>
</TouchableOpacity>
```

In HTML, this would be similar to adding one of the ARIA state attributes. For example, aria-disabled conveys the disabled state of a form control, and aria-selected conveys the selected state of a tab control.

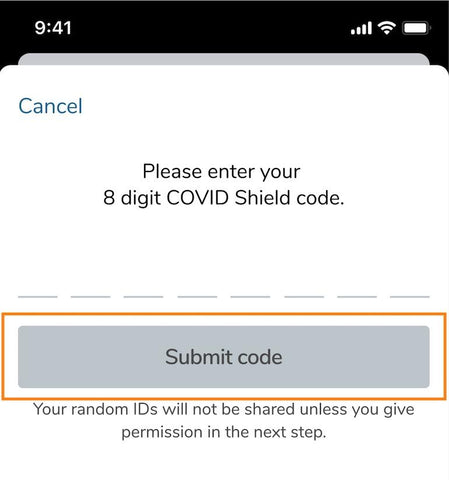

Before:

- 🗣 "Submit code"

After:

- 🗣 "Submit code, dimmed, button"

In the screenshot above, I've highlighted a form submit control with the label, "Submit code." It's visually greyed out indicating its disabled state. However, since there was no programmatic state provided, the screen reader would stop on the control and announce its name only.

After adding the explicit state object and role, the control was announced as, "Submit code, dimmed, button." Note that the word "dimmed" is specific to iOS; Android describes this state as "disabled."
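The checklist and the disabled submit control described above both need their announced state kept in sync with what the UI shows. One way to do that, sketched here under the assumption that your components already receive props named after the state keys, is a hypothetical toAccessibilityState() helper that copies only the keys the caller actually set.

```javascript
// Hypothetical helper: maps a component's own props onto React Native's
// accessibilityState object. Keys the caller did not set are left out, so
// the object only describes states that apply to this control.
const STATE_KEYS = ['disabled', 'selected', 'checked', 'busy', 'expanded'];

function toAccessibilityState(props = {}) {
  const state = {};
  for (const key of STATE_KEYS) {
    // Keep explicit false values: "not disabled" is still a real state.
    if (props[key] !== undefined) state[key] = props[key];
  }
  return state;
}
```

For the submit control this might look like `accessibilityState={toAccessibilityState({ disabled: isSubmitDisabled })}`, and for a checklist row `toAccessibilityState({ checked: item.checked })`; `isSubmitDisabled` and `item` are hypothetical names.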

Headings

Headings are typically large, bold text that denote the title of a page or a new section of content. When testing COVID Shield I noticed there were instances of visible heading text throughout the app, usually at the top of a new view. In essence, the design visually conveyed the presence and structure of a heading, but the aural experience did not.

You may be asking, "Why is this important?"

The answer is that people who depend on assistive technology often navigate by headings first.

When screen reader users visit a site for the first time, this is often how they explore it. Screen readers let the user navigate by a specific type of content: links, buttons, headings, images, tables, lists, and so on.

Specifically, people navigate by headings first in order to quickly get a sense of the content being offered on the page. It's the same idea as someone scanning through and reading the headings of a newspaper or a blog post. The idea is to gather the general sense of the content available, then revisit the sections of interest.

Adding a heading

We can indicate a heading element by adding the accessibilityRole prop to the component which contains the heading text. According to the React Native accessibility API, the string value of "header" should be supplied to the accessibilityRole prop.

```jsx
<Text
  …
  accessibilityRole="header"
>
  Share your random IDs
</Text>
```

In HTML, this is similar to adding the role="heading" attribute to a text element, but it'd be best to use one of the native heading elements instead. It's also interesting that in React Native it's not possible to assign a heading level. In HTML we have the h1 through h6 heading elements to indicate the heading level and logical structure of the content. But with React Native, it's strictly a heading only.

Before:

- 🗣 "Share your random IDs"

After:

- 🗣 "Share your random IDs, heading"

For the example here, the screenshot shows the COVID Shield view with a visually styled text heading with the content, "Share your random IDs." Without the explicit heading declaration, the screen reader would read the content as plain text. This isn't the worst user experience, but it's also not conveying the same information a sighted user would receive: large, bold typography indicating a new section of content.

After adding the accessibilityRole prop with the appropriate "header" value, the text was then described as, "Share your random IDs, heading."

Not only does the aural user experience now describe the text as a heading denoting a new section of content; screen reader users can also navigate by headings alone to understand the content of the view as a whole.

This is a good example of a quick accessibility win: low effort resulting in high impact.
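To make the navigation argument concrete, here is a deliberately simplified model, an assumption rather than how VoiceOver or TalkBack is actually implemented: heading navigation skips every element whose role isn't "header" and reads the rest in order. The view contents below are hypothetical.

```javascript
// Simplified model of "navigate by headings": keep only elements whose
// role is "header" and return their text in reading order.
function headingOutline(elements) {
  return elements
    .filter((el) => el.accessibilityRole === 'header')
    .map((el) => el.text);
}

// Hypothetical view contents; only the first element has the header role.
const view = [
  { accessibilityRole: 'header', text: 'Share your random IDs' },
  { text: 'Explanatory paragraph' },
  { accessibilityRole: 'button', text: 'Enter code' },
];

console.log(headingOutline(view)); // [ 'Share your random IDs' ]
```

Without accessibilityRole="header" on the heading, the same filter returns an empty list, which is roughly what a heading-first screen reader user encountered before the fix.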

Hint text

Hint text provides additional information that isn't visible on screen. For example, if a visual indicator, such as an icon, conveys meaning to sighted users, we also need to pass those details along to people who may not be able to see it.

This situation came up while testing COVID Shield when a couple of items in the main menu would open the device web browser instead of loading a new view inside the app.

When a new browser window opens on click, let the user know. Give power to the user—let them decide how and when they'd like to proceed.

This scenario is also quite common on the web. The idea is that if a link opens a new browser window, or takes the user out of the current app, it's best practice to inform the user of the end result.

Why is this important? Without this context, people might believe they're following an internal site link which loads in the same browser window. If the user is unprepared to move away from the current site, they'd need to put in the effort to switch back to the previous tab or app.

The idea is to give power to the user; inform the user of what might happen upon interaction in order to allow a decision to be made on how and when they'd like to proceed.

Including hint text

We can include hint text by adding the accessibilityHint prop to the component that, when activated, opens the new context. This prop takes a string value defined by the author, so be sure to include something appropriate for the context of the control. Typically, something along the lines of "Opens in a new window" provides the context needed to alert the user.

```jsx
<TouchableOpacity
  …
  accessibilityHint="Opens in a new window"
  accessibilityRole="link"
>
  <Text>Check symptoms</Text>
</TouchableOpacity>
```

In HTML, this is similar to adding the upcoming-but-not-available-yet aria-description attribute.

Before:

- 🗣 "Check symptoms"

After:

- 🗣 "Check symptoms, opens in a new window, link"

For this example, the screenshot shows the COVID Shield menu highlighting a clickable control with the content, "Check symptoms." Beside the text is an arrow icon pointing up and to the right. The intention here is to provide a visual indication of the activation result: leaving the app and entering a different context.

Without the accessibilityHint prop, the control simply read, "Check symptoms."

After adding the accessibilityHint (and accessibilityRole) prop with the hint text value, the control was then described as, "Check symptoms, opens in a new window, link."

Not only is the visual user experience enhanced by the icon; the aural user experience is also enhanced by sharing the meaning behind it. As a result, all users can make an informed decision about if and when to activate the link, either now or later when they're ready.
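Because every control that leaves the app needs the same role and hint, it can help to build those props in one place. A minimal sketch, assuming a hypothetical externalLinkProps() factory with the URL opener passed in so the logic can be tested without a device:

```javascript
// Hypothetical factory (not part of React Native): props for a control that
// takes the user out of the app. The role and hint tell screen reader users
// what will happen before they activate it.
function externalLinkProps(url, openURL, hint = 'Opens in a new window') {
  return {
    accessibilityRole: 'link',
    accessibilityHint: hint,
    onPress: () => openURL(url), // hand the URL to the injected opener
  };
}
```

In an app, the opener would typically wrap React Native's Linking API, for example `externalLinkProps(symptomsUrl, (url) => Linking.openURL(url))`, where `symptomsUrl` is a hypothetical constant. Wrapping the call keeps openURL bound to Linking.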

Focus management

Focus management is a method of willfully and purposefully shifting the keyboard focus cursor from one element to another on behalf of the user. This technique is sometimes required to guide the user through the intended flow of the app. Focus management should only be used when absolutely necessary, so as not to create more work for the user through an unexpected shift in focus.

For example, when opening a modal window, focus must be placed on or inside of the modal window in order to bring context awareness to the user. Otherwise, focus remains on the activator control and the user may not be aware of or be able to easily reach the modal content.

App view focus management

In terms of React Native and single-page apps in general, a critical accessibility issue lies in managing focus between views.

In a traditional browser environment, the user would click a link and a full page refresh would occur. At this point the user's focus would be placed at the top of the document, allowing the user to discover content organically in a top-to-bottom fashion.

With React Native and other single-page app environments, this is not the case. When a new view is loaded onto the screen, sighted users are presented with the new content. However, focus remains on the previous activator control. The problem here is:

- There's no notification provided to screen reader users of a successful view update. Focus remains on the control they previously clicked.

- This would result in a confusing user experience where the user may question the quality of the app, or wonder whether they had done something wrong.

When the user does decide to move their cursor, there's no telling where it may end up.

How do we manage focus from one view to the next? There are a few different approaches you could take, two of which include:

- Shifting focus to the new view container, allowing the user to navigate forward as if a full refresh had taken place

- Shifting focus to the top level heading element, providing a confirmation of view change and allowing the user to move forward from this point

These are a couple of potential solutions. But instead of speculating, let's review some data from a study conducted in 2019.

Data driven design

The study, "What we learned from user testing of accessible client-side routing techniques with Fable Tech Labs," was conducted by Marcy Sutton, an independent web developer and accessibility subject matter expert, in collaboration with Fable Tech Labs, a web accessibility crowdtesting service.

The purpose of the study was to find out which focus management approach rendered the best, most positive user experience for a number of different disability user groups using various assistive technologies.

Specifically, the study included:

- Sighted keyboard-only users

- Screen reader users

- Low-vision zoom software users

- Voice dictation users

- Switch access users

All of these user groups have a unique set of requirements and expectations of what may be deemed a successful user experience.

While the study focuses on JavaScript-based single-page apps, the concept can still be applied to React Native apps.

The full write-up is well worth reading when you have a few minutes, but I'll jump to the conclusion: the approach considered a good solution for some, though not necessarily the best solution for all.

Shift focus to a heading

Tl;dr: shift focus to a heading.

Shifting focus to a heading element was one of the more successful solutions that worked well for most user groups. This solution is ideal as it provides screen reader users with a clear indication of a new view load by way of announcing the heading text. This announcement would imply new content is available for consumption.

For voice dictation, keyboard-only, and zoom users, shifting focus to the heading orients the user to the new starting point in the app. Ideally when the heading is in focus it would include some sort of visual indicator, such as a focus outline. If no outline is present, some sighted assistive technology users may have a more difficult time understanding where their cursor is currently focused.

Focusing on a heading in React Native

How do we shift focus to a heading in React Native? Good question—and one, I'm afraid, I don't have a good answer for.

This was one of the issues I reported, recommending that focus move to the view heading on load. Unfortunately, the COVID Shield team didn't have time to address it.

Instead, we can review what the Canadian Digital Service team implemented for the COVID Alert app.

```jsx
<Text
  …
  accessibilityRole="header"
  accessibilityAutoFocus
>
  Share your random IDs
</Text>
```

Reviewing the COVID Alert app source on GitHub, you can see that the CDS team created their own accessibilityAutoFocus prop. This prop is placed on the heading Text component, and when the view loads, focus shifts to the heading.

In this example, the screenshot shows the COVID Alert app running on an Android phone. The heading text, "Enter your one-time key," is highlighted with a green border from the TalkBack screen reader, indicating the text has focus and is announced when the view loads. With this prop in place, focus is well managed between views, and users of assistive technology are in a better position to be successful.

This is one example solution. There could be others available, such as a third-party component you could incorporate into your project. Or perhaps, depending on how your project is structured, you could simplify things by using a React ref with the componentDidMount lifecycle method to shift focus when the view is fully loaded. But be sure to check out the accessibilityAutoFocus solution on GitHub from the COVID Alert app. It works well.
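The ref-based alternative mentioned above can be sketched as follows. This is not the CDS accessibilityAutoFocus implementation; it assumes React Native's findNodeHandle and AccessibilityInfo.setAccessibilityFocus, which are passed in as parameters so the logic can run on its own. The focusHeading() name is hypothetical.

```javascript
// Sketch: move screen reader focus to a view's heading once it has mounted.
// In an app, deps.findNodeHandle would be findNodeHandle from 'react-native'
// and deps.setAccessibilityFocus would call AccessibilityInfo.setAccessibilityFocus.
function focusHeading(headingRef, { findNodeHandle, setAccessibilityFocus }) {
  if (!headingRef || !headingRef.current) return false; // heading not mounted yet
  const tag = findNodeHandle(headingRef.current);
  if (tag == null) return false; // no native view to focus
  setAccessibilityFocus(tag);
  return true;
}
```

Attach the ref to the heading (`<Text ref={headingRef} accessibilityRole="header">`) and call `focusHeading(headingRef, { findNodeHandle, setAccessibilityFocus: (tag) => AccessibilityInfo.setAccessibilityFocus(tag) })` from componentDidMount, or from a `useEffect` with an empty dependency array in a function component. Whichever approach you choose, verify it with VoiceOver and TalkBack on physical devices.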

Accessibility is more than screen readers

Everything we've discussed today has mostly catered to the screen reader user experience. And while addressing issues for screen readers does help to remove barriers for other assistive technologies, such as keyboard-only and voice dictation users, it shouldn't be the only focus.

iOS and Android have many other accessibility features built in. Here are a few other areas to try out the next time you're testing for accessibility:

- Font size: Make sure your app's text can be dynamically adjusted according to the operating system settings. This helps low vision users read content with ease.

- Color inversion: This also helps people with various visual impairments. Test to make sure your content is still legible when colors are inverted.

- Reduce motion: Use animation sparingly and with purpose. Some people prefer little to no motion as they may be prone to motion sickness or other vestibular issues. On the web, the prefers-reduced-motion CSS media query exposes this operating system setting; in React Native, AccessibilityInfo.isReduceMotionEnabled() provides the same information.
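In a React Native app, the reduce motion setting can drive animation choices directly. A minimal sketch, assuming a fade-in built with Animated.timing and a hypothetical fadeInConfig() helper that drops the duration to zero when the setting is on:

```javascript
// Hypothetical helper: builds an Animated.timing config for a fade-in that
// respects the operating system's reduce motion setting.
function fadeInConfig(reduceMotionEnabled, baseDurationMs = 300) {
  return {
    toValue: 1,
    // With reduce motion on, jump straight to the final state.
    duration: reduceMotionEnabled ? 0 : baseDurationMs,
    useNativeDriver: true, // opacity can be animated by the native driver
  };
}
```

`AccessibilityInfo.isReduceMotionEnabled()` returns a Promise, so a component might call `AccessibilityInfo.isReduceMotionEnabled().then((reduce) => Animated.timing(opacity, fadeInConfig(reduce)).start())`, where `opacity` is a hypothetical `Animated.Value`. To pick up changes while the app is running, `AccessibilityInfo.addEventListener('reduceMotionChanged', handler)` reports updates.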

These are just a few settings available. I encourage you to explore each setting and learn how you can adjust your apps to be more dynamic and inclusive of your users' needs.

Resources

- React Native Accessibility API

- COVID Shield Accessibility Issues

- Google Web Fundamentals: Accessibility

- Google Chrome Developers: A11ycasts with Rob Dodson

- Web.dev: Accessible to all

- Egghead.io: Start Building Accessible Web Applications Today

- Smashing Magazine: How A Screen Reader User Surfs The Web